Cluster-Only Configuration Items

Features and configuration options specific to clustered environments


A cluster is a pair of nodes at a single site that share some configuration and an active/standby relationship, providing automatic failover for high-availability (HA) connectivity. An additional IP address is assigned as the Cluster Virtual IP address, which moves between the nodes if failover occurs.

Certain settings, such as network services and VPN settings, can be configured at the cluster level. These cluster settings override the individual node’s configuration.
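The override rule can be pictured as a merge in which a cluster-level value wins over the node-level value for the same setting. This is a minimal sketch for illustration only: the setting names (`hostname`, `vpn`, `network_services`) and the flat-dictionary layout are hypothetical and do not reflect Trustgrid's actual configuration model.

```python
from typing import Any, Dict


def effective_config(node_cfg: Dict[str, Any], cluster_cfg: Dict[str, Any]) -> Dict[str, Any]:
    """Return the configuration a node would effectively run with.

    Hypothetical model: both configs are flat dicts keyed by setting name.
    Any setting defined at the cluster level replaces the node's own value;
    settings the cluster does not define fall through from the node.
    """
    merged = dict(node_cfg)     # copy, so the node's own config is not mutated
    merged.update(cluster_cfg)  # cluster-level settings take precedence
    return merged


node = {"hostname": "node1", "vpn": "node-vpn-profile"}
cluster = {"vpn": "cluster-vpn-profile", "network_services": ["dns"]}
print(effective_config(node, cluster))
# {'hostname': 'node1', 'vpn': 'cluster-vpn-profile', 'network_services': ['dns']}
```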

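The active member of a cluster is determined by the following factors: cluster heartbeat communication, the cluster mode, the configured active member, and the health of each member.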

Cluster members use a direct TCP connection to each other to determine whether their partner is online and to share their health status. Each node listens on its configured heartbeat IP and port while simultaneously connecting to its partner’s configured heartbeat IP and port.

Heartbeat communication is configured on each node’s cluster page.
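The listen-and-connect pattern can be sketched with Python's standard `socket` module. This is an illustration under stated assumptions, not Trustgrid's implementation: the one-line JSON message, its `node` and `healthy` fields, and the helper names `serve_heartbeat` and `probe_partner` are hypothetical, and `127.0.0.1` with an OS-assigned port stands in for the configured heartbeat IP and port. It shows one direction of the exchange; each real node would both listen and connect.

```python
import json
import socket
import threading
from typing import Optional


def serve_heartbeat(listener: socket.socket, name: str, healthy: bool) -> None:
    """Accept one partner connection and report this node's health status.

    Hypothetical wire format: one JSON object terminated by a newline.
    """
    conn, _addr = listener.accept()
    with conn:
        msg = json.dumps({"node": name, "healthy": healthy}) + "\n"
        conn.sendall(msg.encode("utf-8"))


def probe_partner(host: str, port: int, timeout: float = 2.0) -> Optional[dict]:
    """Connect to the partner's heartbeat IP and port and read its status.

    Returns None when the partner is unreachable or sends nothing parseable,
    which a node would count as a missed heartbeat.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout) as conn:
            with conn.makefile("r", encoding="utf-8") as stream:
                return json.loads(stream.readline())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    # Node1 listens on its heartbeat address; port 0 lets the OS pick a free port.
    listener = socket.create_server(("127.0.0.1", 0))
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_heartbeat, args=(listener, "Node1", True))
    server.start()
    # Node2 connects to Node1's heartbeat address and reads its health.
    print(probe_partner("127.0.0.1", port))  # {'node': 'Node1', 'healthy': True}
    server.join()
    listener.close()
```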

A cluster can be configured in one of two modes, Automatic Failback or Manual Failback, which determine what happens when a failed member returns to healthy status.

Consider a cluster with two members: Node1, the configured active member, and Node2.

| Event | Active member (Automatic Failback) | Active member (Manual Failback) |
|---|---|---|
| Initial state | Node1 | Node1 |
| Node1 becomes unhealthy/offline | Node2 | Node2 |
| Node1 returns to healthy/online | Node1 | Node2 |

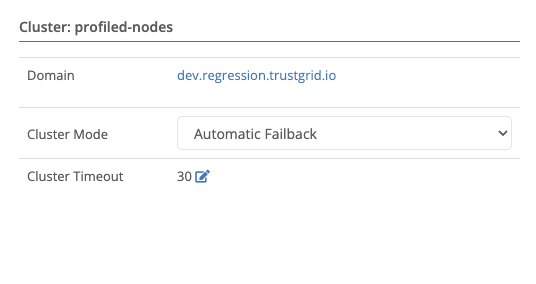
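The table can be reproduced by a small election function. This is a simplified model written for illustration, not Trustgrid's election logic: `FailbackMode`, `elect_active`, and `simulate` are hypothetical names, and the behavior when both members are unhealthy (the current member keeps the role) is an assumption the page does not cover.

```python
from enum import Enum
from typing import Dict, List, Tuple


class FailbackMode(Enum):
    AUTOMATIC = "automatic"
    MANUAL = "manual"


def elect_active(mode: FailbackMode, configured: str, partner: str,
                 current: str, healthy: Dict[str, bool]) -> str:
    """Return which member should hold the active role after a health change."""
    other = partner if current == configured else configured
    # Failover: an unhealthy active member hands off to a healthy partner.
    # Assumption: if both are unhealthy, the current member keeps the role.
    if not healthy[current]:
        return other if healthy[other] else current
    # Automatic failback: the configured active reclaims the role once healthy.
    if mode is FailbackMode.AUTOMATIC and current != configured and healthy[configured]:
        return configured
    # Manual failback (or nothing changed): keep the current active member.
    return current


def simulate(mode: FailbackMode) -> List[Tuple[str, str]]:
    """Replay the table's events for Node1 (configured active) and Node2."""
    events = [
        ("Initial state", {"Node1": True, "Node2": True}),
        ("Node1 becomes unhealthy/offline", {"Node1": False, "Node2": True}),
        ("Node1 returns to healthy/online", {"Node1": True, "Node2": True}),
    ]
    active = "Node1"
    history = []
    for label, health in events:
        active = elect_active(mode, "Node1", "Node2", active, health)
        history.append((label, active))
    return history


for mode in FailbackMode:
    print(mode.value, [active for _, active in simulate(mode)])
# automatic ['Node1', 'Node2', 'Node1']
# manual ['Node1', 'Node2', 'Node2']
```

The only difference between the two modes is the middle rule: in Manual Failback a healthy current member is never displaced, so returning to Node1 requires an operator action.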

The cluster waits for a configurable timeout before considering a failed member lost. This timeout is set on the cluster page.

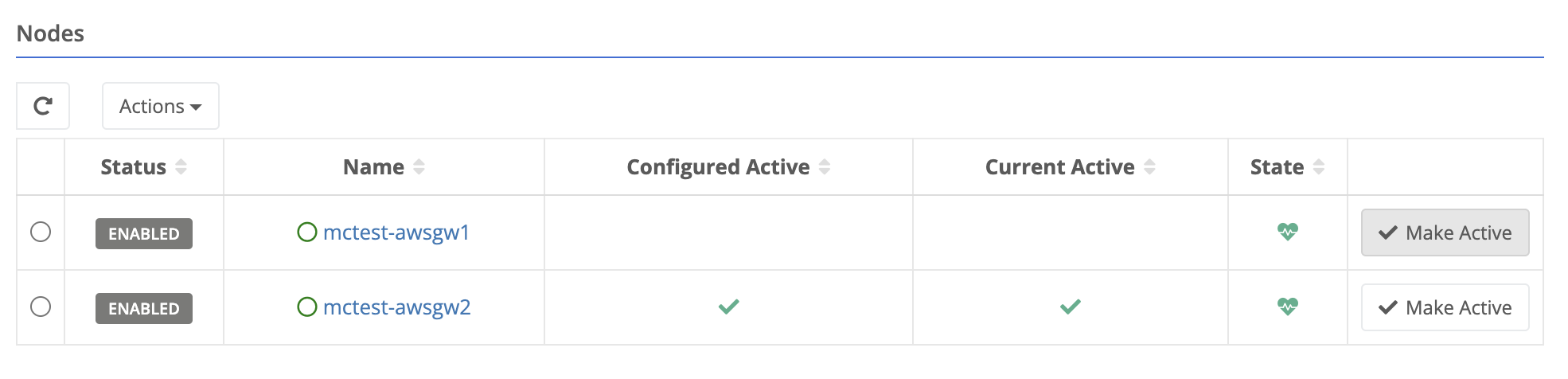

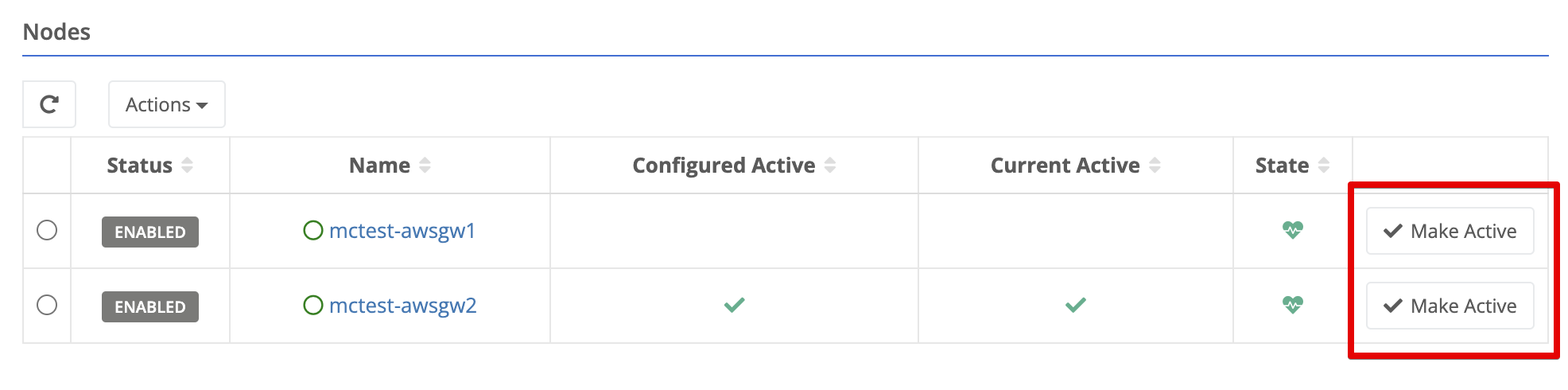
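The timeout means a single missed heartbeat does not cause failover; the partner is only treated as lost once the silence exceeds the configured value. A minimal sketch, assuming a hypothetical `PartnerMonitor` class that receives timestamps from the caller (for example from `time.monotonic()`); the 10-second value is illustrative, not a Trustgrid default.

```python
class PartnerMonitor:
    """Track when the partner's heartbeat was last seen.

    Hypothetical model: timestamps are plain seconds supplied by the caller,
    which keeps the timeout logic independent of any real clock.
    """

    def __init__(self, timeout_s: float, start: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen = start

    def record_heartbeat(self, now: float) -> None:
        self.last_seen = now

    def is_lost(self, now: float) -> bool:
        # The partner is considered lost only once the time since the last
        # heartbeat strictly exceeds the configured timeout.
        return now - self.last_seen > self.timeout_s


monitor = PartnerMonitor(timeout_s=10.0, start=0.0)
monitor.record_heartbeat(5.0)
print(monitor.is_lost(14.0))  # False: 9 s of silence
print(monitor.is_lost(15.5))  # True: 10.5 s of silence
```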

Each cluster has one configured (preferred) active member, which is shown in the cluster’s overview section.

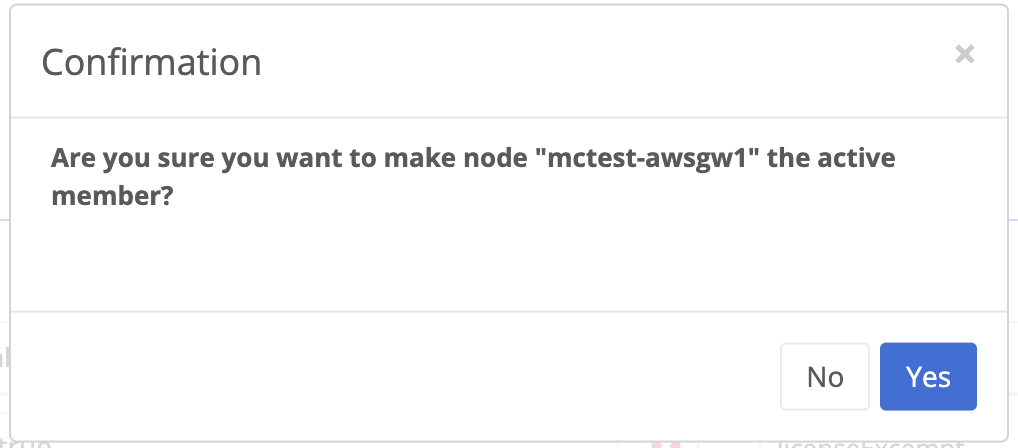

To change the configured active member:

There may be situations where both cluster members are online and can communicate with each other, but external conditions make a node unsuitable to hold the active role. The Trustgrid node service monitors for such conditions and marks a node as unhealthy if one occurs. The node then releases the active role, and its partner takes over if it is online and healthy.

When the condition clears, the node declares itself healthy and informs its partner. Depending on the cluster mode, it may then reclaim the active role.

- Loss of cluster heartbeat communication: If a node cannot communicate with its partner node on the configured heartbeat IP and port, it declares that partner unhealthy and claims the active role if it does not already hold it.
- Interface Link (Up/Down) State: Any interface configured with an IP address in Trustgrid is monitored for an active link to another network device. A link that goes down marks the node unhealthy.
- Upstream Internet Issues: If a Trustgrid node cannot connect to the Trustgrid control plane and also cannot build data plane connections to its gateways, the node is marked unhealthy. All of these connections must be failing before this condition is triggered.
- WAN Interface DHCP failure: If the WAN interface is configured to use DHCP and does not receive a DHCP lease, the node marks itself unhealthy.
- Layer 4 (L4 Proxy) Service Health Check: TCP L4 Proxy Services can be configured to perform regular health checks confirming that a connection can be made. If these checks fail 5 times in a row, the service marks the cluster member as unhealthy.
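The self-health conditions above act as independent checks, any one of which marks the node unhealthy. The sketch below models them with hypothetical names (`NodeConditions`, `L4HealthCheck`, `unhealthy_reasons`, and every field on them); only the five-consecutive-failure threshold and the rule that the control plane and all gateway connections must fail come from this page. Interface state is reduced to a boolean per interface, and heartbeat loss is left out because it changes a node’s view of its partner rather than its own health.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class L4HealthCheck:
    """Counts consecutive failed checks for one L4 proxy service (hypothetical)."""
    threshold: int = 5  # this page states 5 consecutive failures
    consecutive_failures: int = 0

    def record(self, success: bool) -> None:
        # Any success resets the streak; only back-to-back failures count.
        self.consecutive_failures = 0 if success else self.consecutive_failures + 1

    @property
    def failing(self) -> bool:
        return self.consecutive_failures >= self.threshold


@dataclass
class NodeConditions:
    """Hypothetical snapshot of the monitored conditions on one node."""
    interfaces_up: List[bool] = field(default_factory=list)
    control_plane_ok: bool = True
    gateways_ok: List[bool] = field(default_factory=list)
    wan_uses_dhcp: bool = False
    dhcp_lease_ok: bool = True
    l4_checks: List[L4HealthCheck] = field(default_factory=list)


def unhealthy_reasons(c: NodeConditions) -> List[str]:
    """Return the conditions currently marking the node unhealthy (empty = healthy)."""
    reasons = []
    if not all(c.interfaces_up):
        reasons.append("interface link down")
    # Upstream issues only when the control plane AND every gateway connection fail.
    if not c.control_plane_ok and not any(c.gateways_ok):
        reasons.append("upstream internet issues")
    if c.wan_uses_dhcp and not c.dhcp_lease_ok:
        reasons.append("WAN DHCP failure")
    if any(check.failing for check in c.l4_checks):
        reasons.append("L4 proxy health check")
    return reasons


check = L4HealthCheck()
for ok in [False, False, True, False, False, False, False, False]:
    check.record(ok)
conditions = NodeConditions(interfaces_up=[True, True], control_plane_ok=False,
                            gateways_ok=[False, True], l4_checks=[check])
print(unhealthy_reasons(conditions))  # ['L4 proxy health check']
```

In the usage example the control plane is down, but one gateway is still reachable, so the upstream condition does not fire; the L4 check does, because its last five results are failures.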

Cluster members can share the configuration for the following services:

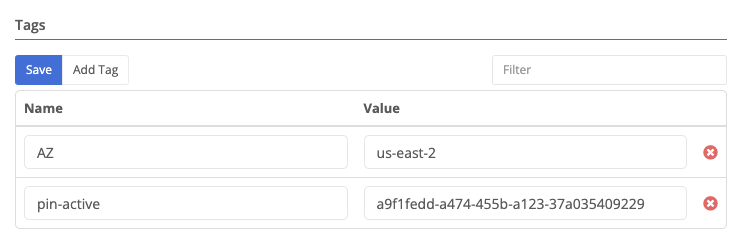

Tags are visible at the bottom of the overview page for the resource.

To add a tag:

Click Add Tag.

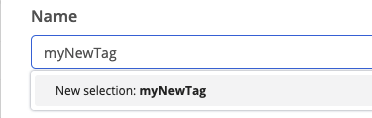

A new row appears at the bottom of the tags table, with a list of your organization’s existing tag names. You can filter the list by typing in the field. Either select an existing tag name, or create a new one by typing it out in full and then selecting New selection: tagName.

Next, move to the value field. As with the name, existing values are listed. To enter a new value, type it in full.

Click Save.

Tag rows can be edited in-place. Change the name or value, then click Save.

To remove a tag, click the red X next to the tag name, then click Save.

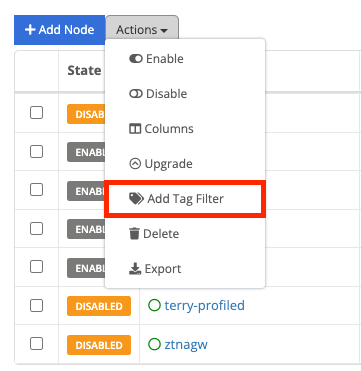

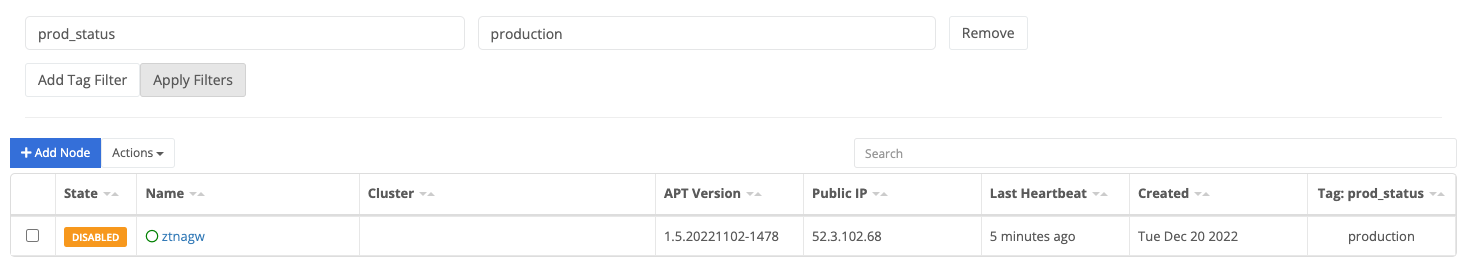

The clusters table can also be filtered to show only clusters with a specific tag name:value.

Click Actions and select Add Tag Filter from the drop-down menu.

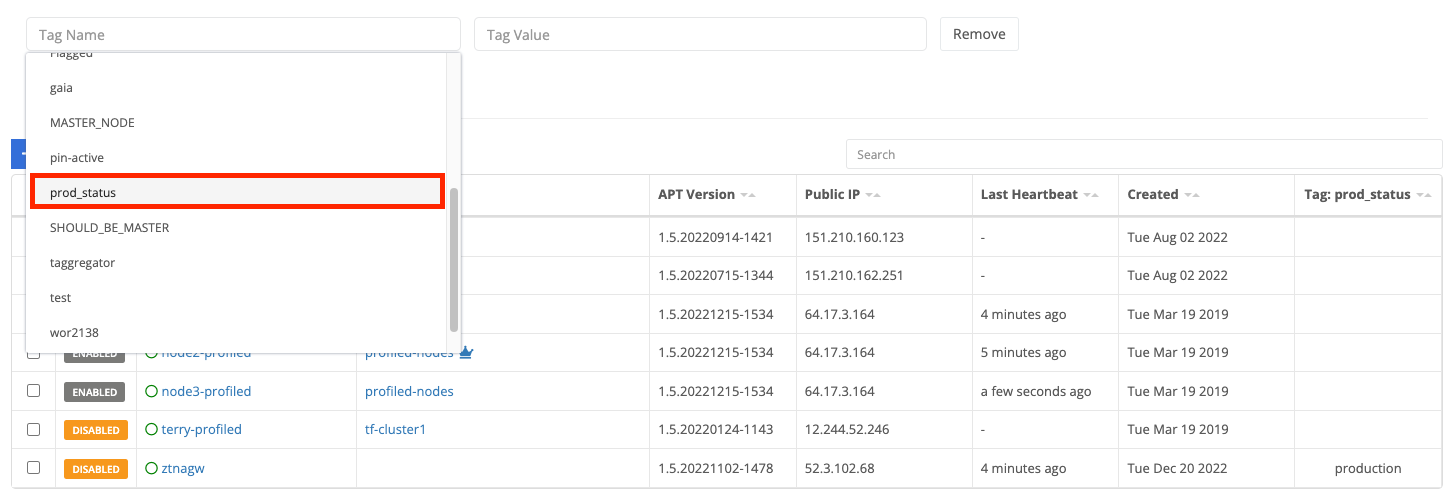

After selecting Add Tag Filter, select the tag-name field to see a list of available tag names. Select the desired tag.

You can also start typing to filter what tag names are shown.

Select the tag value field and you will see a list of available values. Select the desired value.

(Optional) Click Add Tag Filter to include an additional filter. Multiple filters are combined with AND: only clusters matching both tag name:value combinations are shown.

Click Apply Tag Filter and the table will only show matching clusters.
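The AND behavior of multiple tag filters can be expressed in a few lines. This sketch only illustrates the matching rule described above; the function name `filter_by_tags`, the cluster names, and the tags are all hypothetical.

```python
from typing import Dict, List, Tuple


def filter_by_tags(clusters: Dict[str, Dict[str, str]],
                   filters: List[Tuple[str, str]]) -> List[str]:
    """Return names of clusters whose tags match every (name, value) filter (AND)."""
    return [
        name
        for name, tags in clusters.items()
        if all(tags.get(tag_name) == tag_value for tag_name, tag_value in filters)
    ]


clusters = {
    "cluster-a": {"env": "prod", "region": "east"},
    "cluster-b": {"env": "prod", "region": "west"},
    "cluster-c": {"env": "dev", "region": "east"},
}
print(filter_by_tags(clusters, [("env", "prod")]))                      # ['cluster-a', 'cluster-b']
print(filter_by_tags(clusters, [("env", "prod"), ("region", "east")]))  # ['cluster-a']
```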
